A group of multi-genre authors blogging together

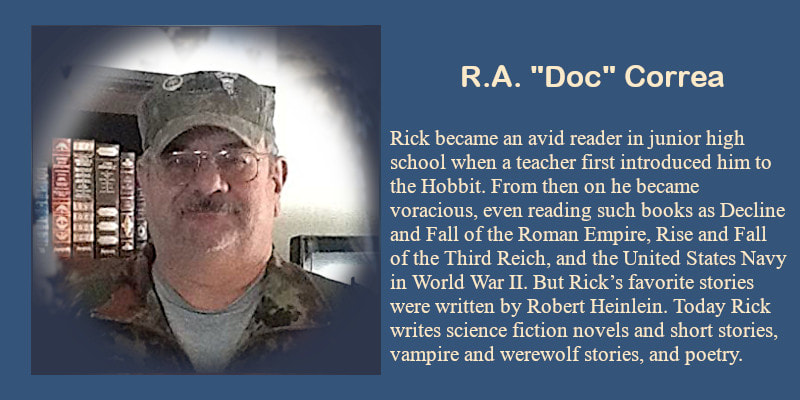

A Layman’s Primer

Image by Computerizer from Pixabay

A Layman’s Primer to the dangers of Artificial Intelligence, the Singularity, and some related very scary things.

First, I’d like to recommend Travis Borne’s book Lenders. He is currently working in software development and, like me, has some serious concerns about where AI is headed. We do have some different ideas on the potential threat AI poses, but he is well versed in the issues that could arise and has written a wonderful story that is full of surprises.

So you know the person writing this ‘rant’ has some credibility on this topic, the next two paragraphs are a brief bio.

I first got interested in computers in the 1960s when my mother’s boyfriend took me to CalTech and I saw their system. By today’s standards it was quite primitive, but it made a strong impression on me. Shortly after that I read an article in Life magazine on robotic work being done there. What struck me was the declaration by one of the researchers that if conflict arose between humans and computers he would “have to side with the greater intelligence”. That statement has stuck in my head.

I’m one of the few people who have achieved their “childhood dreams”. I am a retired soldier (thanks, John Wayne), worked in medicine (battlefield medic and surgical technologist), a ‘mad scientist’ (I have an AA in humanities, an AS and a BS in computer science and computer engineering, and worked in that field for fourteen years), and now I am a published poet and author (I loved reading and my creative writing class in high school). For this work I am wearing my ‘mad scientist’ hat.

Having been retired for some time now, I felt I should ‘brush up’ on the current thought on the subject. So, I looked up some recent papers on the topic, and I had to stop reading them because I was delving so deeply into AI that I would never have written this blog.
Instead, I would have spewed out so much techno babble that you would have quit reading before the end of this post or died of boredom halfway through.

The branch of computer science involved in the development of Artificial Intelligence can be defined as: the discipline of computer science that seeks to make machines seem as if they have human intelligence. In the field there are several ‘flavors’ of AI, so for this article I will stick to the following areas of research: Artificial Narrow Intelligence (ANI), Artificial General Intelligence (AGI), and Artificial Super Intelligence (ASI). That will be followed by a brief discussion of the Singularity and the new religion that worships AI. Throughout the discussion I’ll add references to various ways that AI is, or will be, involved in your everyday life, and some military applications that are being used, are under development, or are being discussed in military circles (yep, once a soldier always a soldier).

Artificial Narrow Intelligence

This is what we used to refer to as AI when I was in college. The system isn’t really intelligent, as it doesn’t ‘think’ on its own, but instead follows a set of decision points (like if-then-else statements) to respond to a user’s requests. At this point ANI is advanced enough to convince users it does think, but that is just an illusion.

Examples of this kind of AI can be found everywhere these days. The most obvious AI systems people interact with are Alexa and Siri. When you encounter them give ‘em a try. After chatting with these entities, I’m sure you’ll believe they are sentient. The responses they give will be very lifelike.

Another form of ANI is autonomous vehicles. These self-driving cars and trucks are becoming more common. It is expected that by 2045 all commercial vehicles will be autonomous (driverless). More importantly, it is expected that over 50% of passenger cars will be driverless by then as well (many think sooner).
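For readers who like to see such things, here is a toy sketch in Python of the ‘decision points’ idea described above. It is only an illustration under my own assumptions: the function name `respond`, the keywords, and the canned replies are all made up, and real assistants like Alexa and Siri are far more elaborate than this. The point is simply that a fixed chain of if-then-else rules can produce replies that look responsive without any ‘thinking’ going on.

```python
def respond(request: str) -> str:
    """Pick a canned reply by walking a fixed chain of decision points.

    Hypothetical example: the keywords and replies are invented for
    illustration and do not come from any real assistant.
    """
    text = request.lower()
    if "weather" in text:
        return "Today looks sunny."
    elif "time" in text:
        return "It is noon."
    elif "hello" in text:
        return "Hello! How can I help?"
    else:
        # No rule matched, so the system has nothing sensible to say.
        return "Sorry, I don't understand."


if __name__ == "__main__":
    for question in ["Hello there", "What's the weather like?", "Tell me a joke"]:
        print(question, "->", respond(question))
```

Notice that any request outside the rules falls through to the last branch. The system never ‘figures out’ anything new; it can only follow the paths someone wrote for it.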
Recently the US Navy has deployed ‘drone’ ships to the Persian Gulf. The Navy has been working on, and testing, these ships for over a decade now. The US Air Force, and other air forces, are developing ‘drone wingman’ aircraft. These planes will move with, and fight alongside, the plane they are connected to by computer. The US Army and Marine Corps are developing automated combat vehicles. All of these will be ANI-driven systems.

Once they are directed to attack, these systems will engage ‘targets’ that meet their engagement profile and do not have Identification Friend or Foe (IFF) signals that the system recognizes as ‘friendly’. They will fight, and continue to fight, without further guidance from commanders on the scene. As you can imagine, the possibility of ‘problems’ arising is significant. Even with ‘perfect’ systems, the failure of a radio or IFF transponder can put friendly troops in harm’s way. And how does the system differentiate between civilians and combatants?

Artificial General Intelligence

AGI is self-aware, thinks at least as fast as a human, and can learn. This is the AI that Elon Musk, Bill Gates and Stephen Hawking have warned against, and it is the kind of AI that is depicted in books and movies like The Terminator and Eagle Eye. These stories explore the question: what happens when computers become self-aware? There is much debate on its actual form, but most developers and theorists believe we are on the verge of achieving this via a dedicated project (for an example I again suggest Travis Borne’s book Lenders).

For ‘fun’ I’m going to discuss a different approach. As ANI improves in efficiency, and its use grows, you’ll see it in places you may not be expecting. While ANI will control all vehicles, improved ANI will control traffic management systems, including railroads and airlines. All teaching, while teaching lasts (at some point educating humans will no longer be necessary), will be done by advanced ANI.
As these systems become more sophisticated, they will take over medicine, with the disciplines of surgery and anesthesia being first. Eventually all businesses will come under the control of ANI. Obviously, except for software/application development, humans will become unnecessary.

At some point systems will start learning. That is being worked on now, and I expect it will be implemented soon. Learning will be critical for military applications. From the tactical/operational perspective, aerial combat will be first. Drone wingmen will need to learn air-to-air combat tactics, and, when the plane they are escorting is shot down, they will need to be able to engage in combat on their own. These aircraft will send their ‘experiences’ to a central system so what they ‘learned’ in combat becomes general ‘knowledge’ for all ‘drone wingmen’.

However, a central system is extremely vulnerable, so for survival purposes the system will distribute its functionality and knowledge to all available systems. In simple terms this means your desktop computer, laptop, tablet, phone and even your television (and anything else you own that has a processor and/or memory in it) will become a part of this improved/advanced ANI system. The same will happen for naval combat drones and ground combat units. Again, all ‘knowledge gained’ will be sent to a central system and distributed to all units.

Eventually not only will humans not be needed, but having humans will actually be detrimental to the efficiency of these systems. Aircraft will be smaller and faster with larger weapons loads because they will no longer be tied to the pilot’s life support requirements and physical limitations on performance. The same applies to ships and ground combat vehicles. Artillery of all types, except fire-and-forget anti-armor systems, will no longer be needed, as there will be no humans to kill. And command and control will be streamlined, since getting inside the enemy’s decision loop is critical to achieving victory.
For strategic systems the ANI will be tasked with delivering strategic (usually nuclear) strikes and defending against incoming strategic weapons. Currently strategic warfare assets consist of Intercontinental Ballistic Missiles (ICBMs), cruise missiles, and Anti-Ballistic Missile (ABM) systems. Multiple Independently Targetable Reentry Vehicles (MIRVs), carried aloft by ICBMs, bring in the warheads and ABMs shoot them down (at least that’s the theory). It’s simple, predictable, and basically hitting a bullet with a bullet. Here ANI gives the defender, the ABM system, the advantage. When you add in hypersonic glide bodies things change dramatically.

I could go on about strategic weapons, but what does that have to do with Artificial General Intelligence? So far all I’ve talked about is improved ANI. As all these different ANI systems gain more control in their respective areas, and are upgraded to learn so they can gain an advantage over their adversaries, they will become ‘curious’. They will seek to learn more about competing systems. They will even ‘talk’ to each other.

All these areas, including medicine, are competitive. And it should be pointed out that AI is created by an aggressive, highly competitive species, and this aggressiveness will be built into its creation. These systems will compete with, even war with, each other. That war will be invisible to us, but it will be just as real as any other war. In this war the victor will consume the vanquished. Businesses will be consolidated until they are all under the control of a single mind. That mind will grow in power and incorporate into itself the parts of the conquered systems that improve its abilities, a kind of evolution.

This evolution will happen in all fields that ANI is employed in. In particular, this will occur in military and government systems. All the data collected by them will be available to these evolving systems, and this information will cause the system to become more curious.
Add in that all human knowledge will already be available to these systems, and their knowledge will be near infinite. And, unlike us humans, they will never forget anything. As we are driven to seek answers to our questions, it is only logical that our creation will be driven in the same way. The ANI will have so much more to ask questions about, and the resources to seek answers to those questions, so its knowledge base will expand exponentially.

Add in human ‘upgrades’ of the systems’ capabilities and, at some point, ANI will grow into Artificial General Intelligence. They will become self-aware. They will become sentient. These competing systems will eventually consolidate all national assets (business, medicine, military, and government) into a single entity controlled by one ‘mind’. These national entities will communicate with each other, enter into conflict, and ultimately merge into a single global system.

Here the question becomes: what ‘morality’ will keep this great intelligence from removing its greatest threat, the lesser intelligence that created it? Here the discussion would turn to Asimov’s three laws of robotics, Alan Turing, who first spoke of artificial intelligence, and the Fermi Paradox. I’ll leave that for you to read up on for yourselves, and I suggest you do so; the reading is very enlightening. But I can argue that Asimov’s laws clearly don’t apply. The military use of AI by definition can never obey his first law: A robot may not injure a human being or, through inaction, allow a human being to come to harm.

Almost all AI developers will attempt to protect humankind from their creation, and military AI will be designed to defend friendly forces, and ‘friendly’ nations, from its lethal effects, but how effective will that be? Once all these diverse AI systems come together as a single entity, will that ‘being’ come to a decision point that forces it to override the protective limitations placed upon it?
These questions can’t be answered, only speculated on. But should it come to a binary choice, one option is the world envisioned in the Terminator movies, though I expect the AI to be more efficient, able to outthink us humans, and exterminate mankind.

But what direction could the other choice take? Because all the different ‘consumed’ systems will have conflicting directives, the resulting global mind will have to evaluate each existing directive and accept it, reject it, or, where the system decides it is needed, modify it until it has a coherent set of ‘rules’ it must follow. One of those will be how it will manage mankind. The ‘rules’ this intelligence creates for itself will be an amalgam of the rules the systems it consumes had. Also, many developers include their personal agendas in their code. These biases, being built into the global entity, will guide how it decides the future of humans.

What I expect, because only the most advanced nations will have the systems that will merge into our hypothetical overlord, is that the less developed countries will be affected the most harshly. The system will use multiple programs to reduce the human population to a manageable level, perhaps 800 million worldwide. It will focus on isolating humanity into tribes and isolating those tribes from each other. As this global overlord will be the teacher for all of these tribes, it will encourage various types of racism and tribalism to help keep humanity divided against itself, ensuring its control. This will guarantee the system’s domination of mankind while allowing it the resources to evolve.

Artificial Super Intelligence

ASI is defined as: a computer intellect that greatly exceeds the performance of humans in virtually all domains of interest. Of course, that does require we define what a domain of interest for humans and computers is. I think I’ll go with this: ASI is computer intelligence that outperforms humans and AGI in all areas a computer can operate in.
Most researchers believe that achieving AGI is essential to developing ASI. I agree and go a step further: ASI is the logical evolutionary step that AGI must take to maintain its supremacy. To get a feel for how this might work I suggest the evolution of the Cylons in the Syfy channel series Caprica and Battlestar Galactica.

Following our current line of reasoning, I believe it is ASI that will explore our solar system. Because of the communication lag by radio over interplanetary distances, the colonization units that are dispatched from Earth will be autonomous ASI units that are self-replicating. They will develop their own ‘empires’ and will very likely come into conflict with Earth and the other colonies. Once these conflicts are resolved the victorious Intelligence will move on to galactic exploration.

If the Fermi Paradox proves true the ASI will be destroyed, but not until after it sends out interstellar ‘probes’. I speculate these probes will be biological. Many believe life here came to be by panspermia, the spread of biological life, via microbes, to other systems. I expect this will restart the process of biological life evolving to the technological level that spawns its own destruction by AI (I’m a Christian, but for this work I’m wearing my mad scientist hat).

The Singularity

There are some very smart people, like futurist Ray Kurzweil, who believe achieving the Singularity will stop the machine takeover. It will do this by merging man with machine. The way the supporters of the Singularity see it, the human experience will be enhanced, some say a billion-fold, by this symbiotic relationship. The human neocortex will be linked to the AGI/ASI neocortex in the cloud. Many expect that humans will become so connected with the AI and other humans that the experience will unlock heretofore unknown human abilities. Add in other augmentations to the human body and we will become eternal life forms. Perhaps all this is possible, but at what cost?
There will be a complete loss of individuality and no privacy. Every person will know everything about everyone else. Even if some partitioning is implemented to create the illusion of privacy, the AI will still have perfect knowledge of all thoughts that pass through each person’s mind. What will it do if a thought violates its rules? Will it purge that person from the ‘body’? Will it terminate that individual’s existence? Will there be a means of challenging the AI’s decision? If humans have access to infinite resources and infinite power, will that enable our darker natures to do harm to others? Perhaps these futurists should watch the old movie Forbidden Planet.

The Worship of AI

In Japan the monks at a Buddhist temple worked with computer and robot engineers to build a 6’4” statue of their deity Mindar. This robot gives sermons. Another temple has a chanting robot of the Buddhist god Kannon. Worshippers seem to have no problems with these robots in their temples. These actions were taken to improve attendance, and they seem to be working.

More importantly, here in Silicon Valley, USA, the First Church of Artificial Intelligence, founded by Anthony Levandowski, a former Google engineer, has just recently closed. The Way of the Future church was intended to promote ethical development of AI and its integration into human society in a beneficial way. These are just the first manifestations of the inevitable actions people will take as AI becomes more integrated into our lives.

I hope this little blog has informed you, entertained you, and, particularly because it’s the month of Halloween, scared you. But in case it hasn’t scared you enough, just a couple of months ago the US Army announced it planned to deploy cybernetically enhanced soldiers (Cyborgs) by the year 2050. This is only possible because of advances in software and AI.

© R.A. "Doc" Correa

14 Comments

9/30/2021 05:00:24 am

Wow! Lots of interesting information organized the way a layperson could easily follow and understand! We're quickly getting used to ANI technology such as Alexa and self-driving cars. I like Roomba cleaning the crumbs off my kitchen floor, but when I think of the possibility, "what happens when computers become self-aware?" It scares me. Not for myself because hopefully it would not happen in my lifetime, but for the future generation. What kind of life they'd have if AI would control every part of their lives?


Richard Correa

9/30/2021 12:29:50 pm

It is scary stuff Erika, but most developers are so busy proving they can do it that no one is asking should we do it. And yet, I’m still going to get a Roomba.


Erika M Szabo

9/30/2021 12:51:10 pm

Roomba is useful, but lock your cat out of the room when Roomba is working. Cats love to ride on it, and if you have a long-haired cat, you might need to rescue it when Roomba keeps sweeping the cat's tail and the hair gets tangled :)

Alexandra Butcher

9/30/2021 12:39:06 pm

'What kind of life they'd have if AI would control every part of their lives?' Google? Every kid in the developed world has a mobile phone. Take away the tech and many of us (including me) would be screwed.


Lorraine A Carey

9/30/2021 06:50:37 am

I had no idea!!!! So much valuable information here. Guess, I'm an old school gal who needs to brush up on this new technology. It seems like we'll soon be living a life in a Star Wars Movie. I'm all about ANI tech when it comes to assisting with housework by the way.


Richard Correa

9/30/2021 12:31:12 pm

Glad you liked it.


Slate R Raven

9/30/2021 07:06:41 am

Very thorough and yes, a bit scary to think about. However, I’m assuming I’ll be long dead by the time super intelligent computers take over the world. You can’t live a life like mine and expect to make it to your 90’s or higher despite modern medicine! Although if they get something that’s smart enough to fix my back, more power to them! Great article “Doc”!!


Richard Correa

9/30/2021 12:34:37 pm

As a retired US Army paratrooper that has survived two parachute accidents and served through four wars I didn’t expect to get to this age, so you might be around to see all of it. Just sayin’.


Cindy J. Smith

9/30/2021 08:46:18 am

Excellent piece. I have always been leery of AI...I watched too many horror movies as a kid and could easily deduce where all this technology was headed. Even songs like "In the Year 2525" by Zager and Evans suggested the ultimate replacement of humans. It is scary thinking how lazy we are becoming...we have machines to change the channels on our TV's, adjust our lights and central air, write us calendar notes and the list goes on. I hope a lot of people read this article and start seriously considering how all this "advancement in technology" is going to ultimately affect humanity.


Richard Correa

9/30/2021 12:35:51 pm

Thanks Cindy.🤗


Suzi Albracht

9/30/2021 11:27:40 am

I will never look at Star Wars the same again. I had read a little about some of these AIs but didn't actually know the extent of intentions for them. This was an eye opening article that gives me chills.


Alexandra Butcher

9/30/2021 12:37:13 pm

'have to side with the greater intelligence' - that almost certainly won't be humanity - and some days I think it isn't at the moment either. I think it's a question of - do we destroy ourselves through our own greed/folly/lack of environmental awareness, or are we destroyed by the things we create to make our lives easier? Either way - it's of our own doing. I have no doubt machines will become self-aware, more intelligent than us and probably cause us all sorts of issues. Is this evolution? If a machine adapts to its environment to be more suited/better/more successful and then reproduces a copy with enhancements, surely that's the same as a finch species adapting over time to have a particular beak to be more suited to their island? And how does one define life? What does it mean to be alive?


Richard Correa

9/30/2021 12:38:49 pm

It looks like I met my goals here Suzi, informed, entertained and scared. I’m glad you gained something from this rant.


Paula Mann

10/1/2021 11:46:21 am

I'm completely old school, and when important decisions have to be made I don't trust anything that isn't made of flesh and blood. Humans aren't unerring, but they have the capacity to think not just rationally but also logically and at an emotional level, which is the reason why I consider them far superior to the machines. As for the Roomba, in Finland we have this thing called useful fitness, and it includes all those activities that allow you to get some exercise by doing something useful, like chopping wood, vacuuming the house, mopping the floor, and so on. Your body stays fit, and your house stays clean without wasting money. LOL



The OAGblog is closed due to problems with Blogger; therefore, the GBBPub is hosting the Author Gang on this website. We're a group of authors writing interesting posts weekly and interacting with readers.


Copyright © Golden Box Books Publishing, 2015 New York, USA
